MEET THE ARTISTS: Maria Arnal Dimas

Impossible Larynx – an artistic research on modeling voices

The human larynx can produce an extraordinary range of sounds, much more than those needed to speak. From an evolutionary perspective, this versatility in vocalization, particularly evident in our singing abilities, likely served practical purposes in ancient times. The human voice is an extremely sophisticated tool, the oldest musical instrument and a cross-over of many social constructs, traditionally connected to the expression of feelings and identity. However, what does the concept of a voice involve in the 21st century? How do technological challenges and societal shifts shape its role? And what does it mean for a voice to exist without a physical body or to be associated with a synthetic form?

By training a physically based model of a vocal track, combined with machine learning, we can identify and modify parameters attached to specific areas, technical and expressive singing features, but also hyper-dimension and expand the possibilities of a human voice, creating impossible vocal sounds. Through the exploration of the latent space of a voice, both symbolic and acoustically, we will be able to add a layer of understanding to AI voice generation models. Stretching the physical limits of a body through different trained models, we aim to develop a modular neural tool capable of real time performance, paving the way for innovative approaches to vocal music production.

The integration of multiple voice processing models is an approach that allows a deeper understanding of different voice processing features and their potential synergies. By adding, also, a physically based vocal tract model, we believe that it will provide a more realistic representation of vocal production and a possibility to modulate specific areas that produce sound, adding controllability to this technology. By understanding better the inner structures of these models, we can start tailoring one that suits our needs.

Throughout the work in progress, an evaluation protocol has been created to compare the outcomes of voice conversions across different models and their respective variations. This protocol draws upon the rich and complex tradition of flamenco singing technique, providing a robust framework for assessment. Additionally, a curated selection of a cappella songs from diverse traditional styles has been chosen as inputs, reflecting technical complexity in performance.

Furthermore, special interest has been put in building a diverse yet personal dataset, recognizing its crucial role as the foundation of our trained voice model. Experimenting with various sounds and expressive features remains a constant practice in our ongoing work. Additionally, our research is significantly shaped by understanding of the relationship between body and voice. This understanding influences how we approach voice processing systems and their connection to vocal training and performance techniques.

Developing a neural tool grounded in physical principles and integrating features from various voice processing models, highlights the physical nature of voice production alongside the abstract concept of synthetic voices. Furthermore, voice processing technologies have the potential to enhance accessibility and inclusivity for people with speech impairments or disabilities that might need custom-made solutions.

As a singer and performer, I see the exploration of synthetic voice architectures as an artistic medium and, by exploring these technologies, the project bridges the gap between artistic expression and technological innovation, which I believe creates exciting opportunities for new forms of musical expression and creativity. By exploring these technologies, we can open up new sonic possibilities for listeners and singers, encouraging them to expand their auditory perception beyond their creative practices.

Lastly, our plan involves the creation of a 3D visualization of a vocal tract that dynamically responds to the singing voice in real-time and adds a visual component to the listening experience, creating a multimodal exploration of sound production. This integration of visual and auditory features might enhance listeners’ understanding of the relationship between vocal anatomy and sound, enriching their musical experience, engaging with their vibrant singing bodies and igniting their listening imagination.

Maria CHOIR: reflection and toughts on the project by Maria Arnal Dimas

Main learnings:

“During this phase of the project, I have been learning a great deal about composing with synthetic voices and the various software developed to utilize them. Additionally, as a singer, the desire to dissociate body and voice through technology in this project has offered me new perspectives, both physically and compositionally. The extended collaboration with the scientific team over these months has greatly expanded my knowledge, from sound processing to the internal architecture of the models, as well as collaborative creativity. In conclusion, I feel incredibly fortunate to have had such a strong team supporting me throughout this journey.

Collaboration with AIR partners:

“The work-in-progress with the scientific team has been very consistent and I really feel that we are a great team.”

KNOWLEDGE TRANSFER

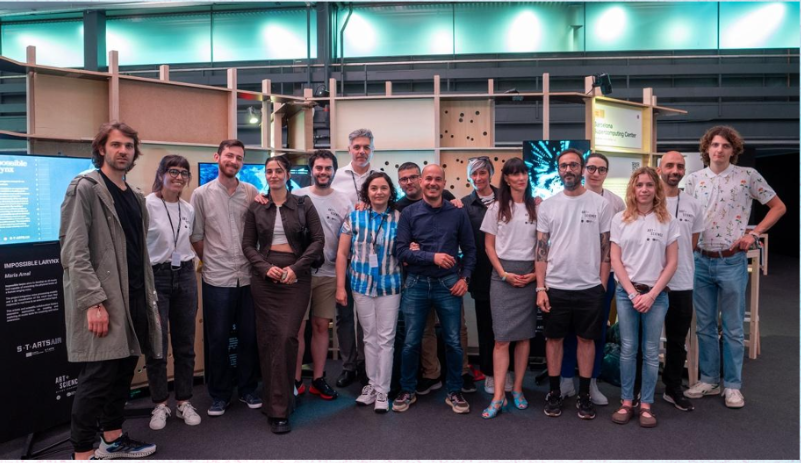

The presentation of the work in progress of the research at the Sónar+D festival invited participants to see the perspectives of the artists and the scientists working together on a joint effort. Centered around the art+science collaboration more than in the actual outcome of the research, the activity presented the opportunities that can open and a methodology for approaching complex problems.

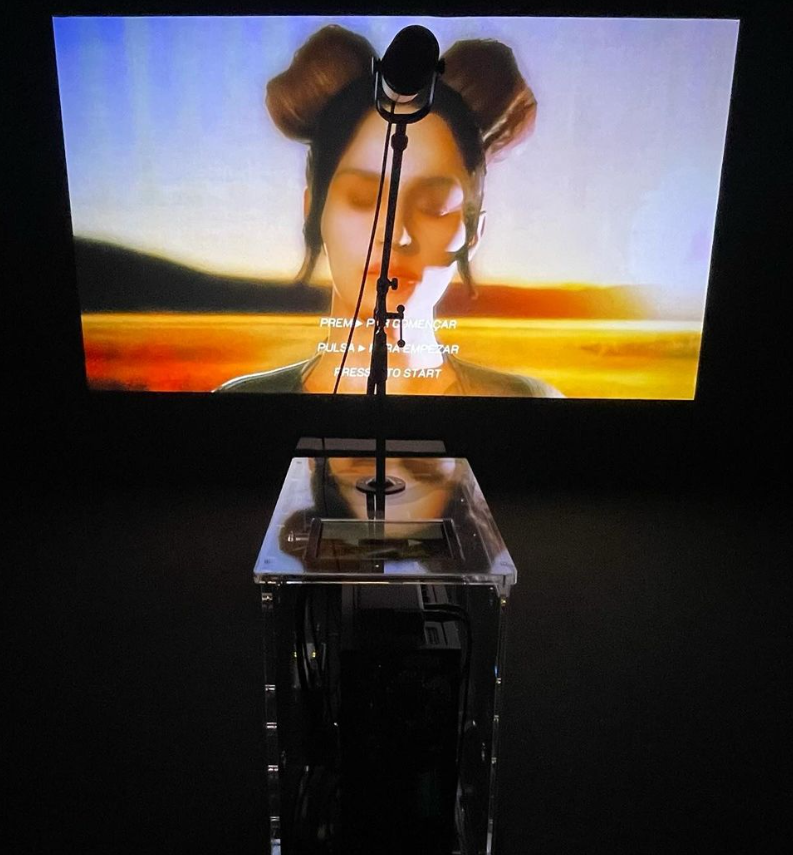

The show consisted of three pieces displaying the current state of the project, and a joint presentation of the artists in one of the conference stages of the festival. María Arnal’s contribution to the booth was an app that took the singing voice of the audience through a microphone and interpreted it to infer the physical configuration of the mouth and vocal tract of the person, displaying the inner probabilities of the model as well as a 3D representation on a screen. The presentation session later focused on the application possibilities and future of the technology.

Event 1 Details

- Event Name: Sónar+D hall

- Date: 13th to 15th of June, 2024

- Location: Fira de Montjuic, Barcelona

Event 2 Details

The presentation of the work in progress of the research at the oppening of the Connectivity Days Conference of the Vic University invited participants to see the perspectives of the artists and the scientists working together on a joint effort. It was a shared presentation between the residence artists Maria Arnal and Sergio Sanchez-Ramírez researcher at BSC, introduced by Francisco Iglesias from Epica Foundation. The Knowledge Transfer Session was centered around the art+science collaboration and the potential of the art-driven innovation, not only for an artistic result but also as a potential tool to be applied in many different verticals, such as the health sector.

The talk included both a formal shared presentation, done between Maria and Sergio, pointing the history of the research, the current status and the potentials of the research and a short demo/performance to demonstrate the potential of the prototype.

- Event Name: Connectivity Days 2024 UVic-UCC

- Location: Fira de Montjuic, Barcelona

This project is funded by the European Union from call CNECT/2022/3482066 – Art and the digital: Unleashing creativity for European industry, regions, and society under grant agreement LC-01984767